In a restaurant on a Tokyo street, a foreign traveler points at the menu, trying to inquire in English: “Hi, just wondering—does this contain any nuts? I’m allergic.” The restaurant employee freezes, unable to understand what the customer is saying. They can only bow and repeat “すみません” (“excuse me”) while nervously gesturing with their hands. The traveler, at a loss for words, is unsure whether the employee has understood. They exchange awkward smiles as tension fills the air. Despite their close proximity, it’s as if they were separated by a thick pane of glass.

Such scenes are daily occurrences in Japan’s convenience stores, restaurants, train stations, and other public places. Each year, as many as 36 million international tourists visit the country. The language barrier is not just a minor inconvenience during travel; it has gradually become a major pain point in the high-pressure travel industry, affecting not only the tourist experience but also Japan’s broader global competitiveness.

This pain point is precisely what the Japanese startup Shisa.ai is determined to solve. Founder Jia Shen and his three-person core team are using AI to change the way people communicate. Together, they’ve created a real-time voice translation product, Chotto.chat. Users simply open their phones and speak naturally in their native language.

Whether they’re in a drugstore, a restaurant, or a taxi, Chotto instantly translates their speech into fluent Japanese, ensuring the conversation feels natural and emotionally resonant. “Speech is better than text at conveying human emotions and tone. Only when communication sounds natural will people feel comfortable speaking up,” says Jia Shen.

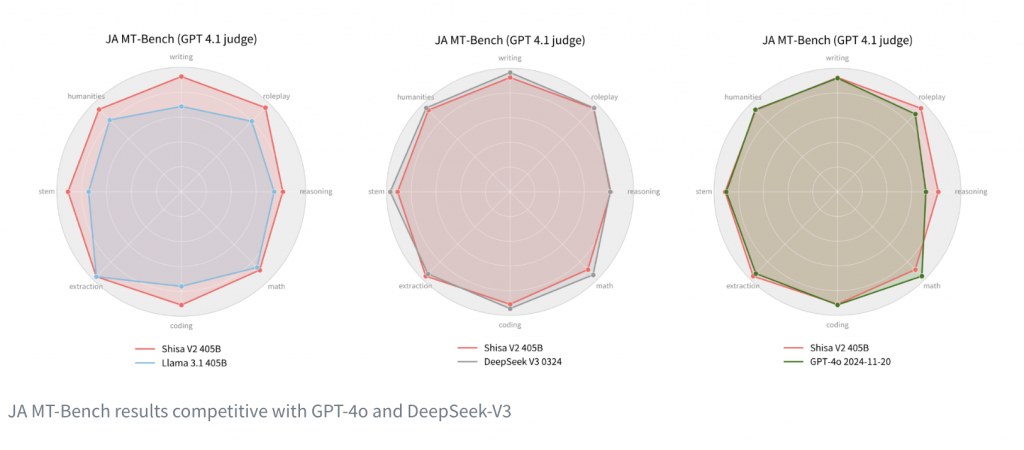

Shisa.ai’s large self-trained Japanese language model, Shisa V2-405B, supports Chotto behind the scenes. Boasting an impressive 405 billion parameters, the model has been released under a community license, making it available for research and noncommercial use, and has become one of the best-performing Japanese language models currently available.

According to the team’s evaluation results, Shisa V2-405B demonstrates capabilities comparable to OpenAI’s GPT-4o and China’s DeepSeek-V3 across multiple Japanese language tasks—including instruction understanding, role-playing conversations, Japanese-English translation, semantic reasoning, and text generation.

Surprisingly, this achievement, which rivals those of tech giants, was created by a “nano team” of just three people. Within one month of its launch, the model accumulated over a million downloads on Hugging Face, attracting global attention.

Why Does Japan Need Its Own Large Language Model?

For Jia Shen, these efforts seek to address a structural challenge spanning three dimensions: culture, economy, and national security.

But it all begins with the language itself. Japanese relies heavily on “context.” Tone, relationships, and social cues carry various “subtexts,” implied meanings that Japanese people do not express directly. Jia Shen provides an example: “In Japan, refusals are often not explicitly stated. For instance, when someone says, ‘That day is not very convenient,’ it sounds polite, but it’s actually a clear rejection.”

Similarly, a phrase like “Aishiteru” has no clear subject or object. Depending on the context, it could mean “I love you,” “I love her,” or even “She loves him.” If large language models cannot understand the broader context, they cannot correctly infer meaning, and this linguistic characteristic poses a significant challenge for AI.

It also makes cross-cultural communication difficult—especially in service industry settings.

In April 2025 alone, Japan welcomed 3.9 million tourists, setting a new monthly record. However, the retail and restaurant industries face unprecedented labor shortages, and the language barrier is causing Japan to miss out on numerous business opportunities.

Looking further ahead, generative AI is rapidly evolving from a business tool into a strategic geopolitical asset. If the United States or China were to restrict the export of their foundation models, countries that heavily depend on models developed overseas may face risks they cannot independently address. In this context, having a proprietary model that can understand local language and culture is not just about improving efficiency; it’s about digital sovereignty and national resilience.

Shisa.ai has decided to release its models under a community license, allowing them to be used for research and noncommercial purposes. The team believes that language models should not be monopolized by a few tech giants but should serve as basic infrastructure that everyone can share. This is not just a technological breakthrough, but also a declaration of intent: to give more developers and businesses committed to local AI development the opportunity to participate in and strengthen AI that truly understands the Japanese language and culture.

Overcoming Cultural Barriers Through “Voice”

Shisa.ai’s first product, Chotto.chat, is a real-time translation system that starts with “voice.”

“I’m sure you’ve noticed by now that I speak very quickly!” Jia Shen says with a laugh. He chose to begin with real-time voice translation, creating a system that could keep up with speaking speed and adapt to the natural rhythm of conversation. “Chotto.chat is designed for people like me who speak quickly and need real-time communication.”

Unlike general translation tools, Chotto.chat is specifically optimized for Japanese speaking habits. Whether you speak rapidly, habitually lower your voice in public, or speak softly into your phone, it can recognize and respond naturally in real time, ensuring that conversations aren’t hindered by technology.

“We don’t just do simple translation; we make AI understand tone and context so that it can speak naturally and with emotion like a real person,” Jia Shen explains. “It’s like casting voice actors for a drama. It’s not just about reciting the lines correctly, but about speaking with emotion and depth.”

Although Chotto.chat has not yet officially launched its app, it already attracts over 6,000 users daily, with an average session time of 27 minutes per person. It’s not only useful for shopping and ordering food; it can also be used by couples and friends from different cultures, allowing them to communicate naturally in their native languages.

From Restaurants to Stations: Shisa.ai Becomes the “Invisible Translator” in Business Settings

Beyond everyday conversations, Shisa.ai’s voice technology has quietly entered physical spaces in Japan, becoming an important assistant for welcoming foreign customers.

In restaurants, Shisa.ai serves as a language assistance tool for staff, helping them respond to foreign customers’ inquiries about allergens and supporting new employees as they learn on the job. In retail stores, Shisa.ai handles common issues such as tax exemption rules, size conversions, and return/exchange policies, allowing frontline staff to respond smoothly without interrupting their work.

This kind of real-time support also extends to public spaces. Inside Yokohama Station, Shisa.ai has installed AI kiosks supporting 17 languages, allowing travelers to inquire in real time about restrooms, tickets, and store locations. These kiosks act as “talking information boards,” bridging language and information gaps.

Next, Shisa.ai is planning to extend its voice applications by creating virtual pets that combine chat, reminder, and companionship functions—offering a range of future solutions for Japan’s aging society.

Giving Every Culture the Right to Be Heard

Shisa.ai was founded by three immigrants who chose to build in Japan. They believe AI sovereignty must start with local language and culture—not just to preserve diversity, but to ensure data privacy and national resilience.

CEO Jia Shen co-founded the company with CTO Leonard Lin, who led the development of the Shisa model. Their third team member, Adam Lensenmayer, is a well-known anime translator whose credits include Attack on Titan, Chibi Maruko-chan, Space Brothers, Detective Conan (Movie) and Kaiju No. 8. His attention to tone and linguistic nuance helped the model align more deeply with the subtleties of Japanese communication.

For Jia Shen, the core of Shisa.ai isn’t just about creating “the most powerful AI,” but rather about answering a deeper question: can technology bridge gaps between us and our families, and between us and our culture?

This idea was born out of deep concern for his family.

“My father passed away ten years ago, and my daughter is five years old, so she never met her grandfather,” explains Jia Shen. “But through language models, I can preserve his voice, tone, and life philosophy. In the future, my daughter might be able to talk with her grandfather and listen to the family jokes that have been passed down.”

It’s not just about the preservation of memories but the continuation of culture, or as he calls it, “cultural capture”: recording the voices and emotions that don’t automatically remain on the internet. These include elders’ daily conversations, local dialects, the tonal shifts Gen Z uses on dates, and the cultural memories quietly flowing among groups who don’t go online or post on social media. These subtle yet profound words, if unheard or unpreserved, could vanish in an instant.

Shisa.ai also plans to reach more users and extend voice translation to physical spaces so that everyday environments like train stations, shopping malls, and restaurants can provide “someone who understands what you’re saying.”

After all, the most moving technology isn’t always the most conspicuous. It exists in the moment when a sentence is understood or an emotion is comprehended, quietly exerting its power—and bringing people a little closer together.